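In Java, serialization and deserialization convert between objects and byte sequences. Serialization serves two main purposes: (1) persisting an object to a file on disk as a byte sequence, and (2) sending an object over the network. In Hive, serialization and deserialization instead convert between the column values of a table row and a byte sequence. A Hive SerDe combines two functions, Serialize and Deserialize. For a query such as select * from tb_example, Deserialize parses the bytes stored on HDFS into readable column values; when rows are written into a table (for example by an insert or a create table ... as select), Serialize turns them into the bytes that are stored on HDFS.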

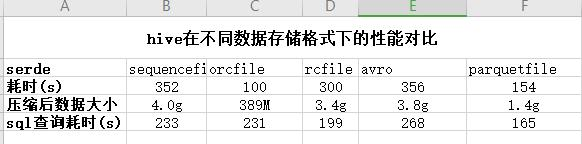
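The column-to-bytes conversion described above can be sketched in a few lines of Java. This is only a conceptual illustration, not Hive's LazySimpleSerDe: the class `DelimitedRowSketch`, its two methods, and the sample row values are hypothetical, and the `|` delimiter is borrowed from the `FIELDS TERMINATED BY '|'` clause used in this article's table definitions.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Conceptual sketch only: NOT Hive's LazySimpleSerDe, just the same idea in miniature.
final class DelimitedRowSketch {
    // Field delimiter, matching FIELDS TERMINATED BY '|' in the table definitions.
    private static final String DELIMITER = "|";

    // "Serialize": turn one row's column values into the bytes of a text line.
    static byte[] serialize(List<String> columns) {
        return String.join(DELIMITER, columns).getBytes(StandardCharsets.UTF_8);
    }

    // "Deserialize": turn the stored bytes back into column values.
    static List<String> deserialize(byte[] line) {
        String text = new String(line, StandardCharsets.UTF_8);
        // Pattern.quote because '|' is a regex metacharacter; -1 keeps trailing empty columns.
        return Arrays.asList(text.split(Pattern.quote(DELIMITER), -1));
    }

    public static void main(String[] args) {
        // Hypothetical values for expodate, addcode, sbtid, rscode, rsname, scid.
        List<String> row = Arrays.asList("20200101", "A01", "S001", "R9", "news", "");
        byte[] stored = serialize(row);
        System.out.println(new String(stored, StandardCharsets.UTF_8));
        System.out.println(deserialize(stored).equals(row));
    }
}
```

A real SerDe also handles column types, null markers, and escaping, all of which this sketch leaves out.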

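For large data sets, Hive officially supports several storage formats that can compress the data, but these formats differ in compression efficiency and query performance. The example below (a 3.6 GB log file, about 37 million rows) compares how Hive tables stored in different formats perform.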

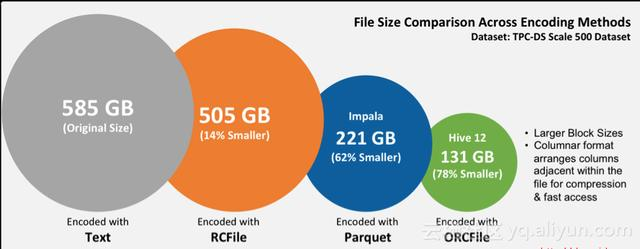

Compression ratios of different data formats (image from the internet)

- Create a plain Hive table, tb_iptv_data, as the base table and load the data into it

By default Hive uses org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe.

    create table if not exists tb_iptv_data(expodate string, addcode string, sbtid string, rscode string, rsname string, scid string)
    row format DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n';

    -- then create a table in sequencefile format
    create table if not exists tb_iptv_data_sequence(expodate string, addcode string, sbtid string, rscode string, rsname string, scid string)
    row format DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n'
    stored as sequencefile
    as select * from tb_iptv_data;

    -- elapsed time
    Stage-Stage-1: Map: 15 Cumulative CPU: 306.47 sec HDFS Read: 3868909814 HDFS Write: 4310770586 SUCCESS
    Total MapReduce CPU Time Spent: 5 minutes 6 seconds 470 msec
    OK
    Time taken: seconds

- Based on the base table, create tables in the other data formats (orcfile/rcfile/avro/parquetfile); once the tables are built, run a SQL query against them to compare execution efficiency (cluster hardware: 24 cores, 32 GB of memory)

The query used for the comparison:

    SELECT count(*) from (SELECT rscode, sbtid, count(*) FROM tb_iptv_data GROUP BY rscode, sbtid) t

org.apache.hadoop.hive.ql.io.orc.OrcSerde format

    create table if not exists tb_iptv_data_orc(expodate string, addcode string, sbtid string, rscode string, rsname string, scid string)
    row format DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n'
    stored as orc;

org.apache.hadoop.hive.serde2.columnar.ColumnarSerDe format

    create table if not exists tb_iptv_data_rc(expodate string, addcode string, sbtid string, rscode string, rsname string, scid string)
    row format DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n'
    stored as rcfile
    as select * from tb_iptv_data;

    -- table creation time
    Stage-Stage-1: Map: 15 Cumulative CPU: 255.19 sec HDFS Read: 3868912709 HDFS Write: 3662099227 SUCCESS
    Total MapReduce CPU Time Spent: 4 minutes 15 seconds 190 msec
    OK
    Time taken: 300.767 seconds

    -- query time
    6711120
    Time taken: 199.498 seconds, Fetched: 1 row(s)

org.apache.hadoop.hive.serde2.avro.AvroSerDe format

    create table if not exists tb_iptv_data_avro(expodate string, addcode string, sbtid string, rscode string, rsname string, scid string)
    row format DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n'
    stored as avro
    as select * from tb_iptv_data;

    -- table creation time
    Stage-Stage-1: Map: 15 Cumulative CPU: 550.79 sec HDFS Read: 3868919834 HDFS Write: 4086779757 SUCCESS
    Total MapReduce CPU Time Spent: 9 minutes 10 seconds 790 msec
    OK
    Time taken: 356.338 seconds

    -- query time
    6711120
    Time taken: 268.299 seconds, Fetched: 1 row(s)

org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe format

    create table if not exists tb_iptv_data_parquet(expodate string, addcode string, sbtid string, rscode string, rsname string, scid string)
    row format DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n'
    stored as parquetfile;

org.apache.hive.hcatalog.data.JsonSerDe format

    create table if not exists tb_iptv_data_json(expodate string, addcode string, sbtid string, rscode string, rsname string, scid string)
    row format Serde "org.apache.hive.hcatalog.data.JsonSerDe";

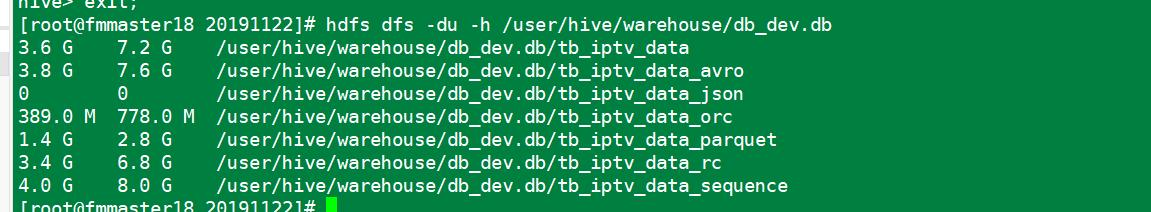

Data size of each table
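As a rough cross-check on the sizes, the HDFS Read and HDFS Write counters from the job logs quoted in this article can be turned into a write-to-read ratio. The sketch below assumes those counters approximate the source size and the resulting table size, which the article does not confirm. The class `HdfsWriteRatio` is a hypothetical helper, and only the three jobs whose logs appear in the article are included.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: simple arithmetic over counters copied from the job logs.
final class HdfsWriteRatio {
    // Bytes written divided by bytes read for one create-table-as-select job.
    static double ratio(long hdfsWrite, long hdfsRead) {
        return (double) hdfsWrite / hdfsRead;
    }

    public static void main(String[] args) {
        // Each entry is {HDFS Read, HDFS Write} as reported in the logs.
        Map<String, long[]> jobs = new LinkedHashMap<>();
        jobs.put("sequencefile", new long[] {3868909814L, 4310770586L});
        jobs.put("rcfile", new long[] {3868912709L, 3662099227L});
        jobs.put("avro", new long[] {3868919834L, 4086779757L});

        for (Map.Entry<String, long[]> job : jobs.entrySet()) {
            long[] readWrite = job.getValue();
            System.out.printf("%-12s write/read = %.3f%n",
                    job.getKey(), ratio(readWrite[1], readWrite[0]));
        }
    }
}
```

The ratios come out at roughly 1.114 for sequencefile, 0.947 for rcfile, and 1.056 for avro. By this measure the sequencefile and avro jobs wrote more bytes than they read. The article does not state which compression settings were in effect, so these numbers are only indicative.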

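- Summary

- Time spent compressing and writing the data: ORC is the most efficient, followed by Parquet, while sequence/rcfile/avro are slower

- Size after compression: orcfile has the best compression ratio, followed by Parquet

- SQL query performance: Parquet performed best, followed by rcfile (this result disagrees with the conclusions of other articles online and with what the ORC website describes; the reason is still under investigation)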
