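The fix I ended up with was to replace the original LSTM with CudnnLSTM.

I noticed that GPU utilization was sometimes low for the models I was running. After splitting the model into parts and running them separately, I determined that the LSTM was what dragged GPU utilization down.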
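A minimal sketch of that kind of split-and-run check, assuming each part of the model can be run on its own (for example as a separate `sess.run` fetch). The names `timed` and `slowest` are hypothetical helpers made up for this illustration, and wall-clock time is only a proxy for GPU utilization, so it is worth watching `nvidia-smi` alongside it.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(name, timings):
    """Add the wall-clock time spent inside the `with` block to timings[name]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        timings[name] = timings.get(name, 0.0) + elapsed


def slowest(timings):
    """Return the name of the part that accumulated the most time."""
    return max(timings, key=timings.get)
```

Wrapping each sub-graph run in `with timed("lstm", timings): ...` and then calling `slowest(timings)` points at the part to inspect first.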

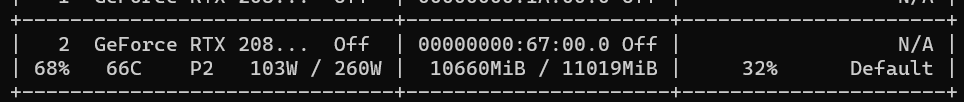

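Screenshot of the old utilization

With the new version, utilization is close to 100%.

The code I finally rewrote is in the appendix.

The original code is in the appendix as well. After looking through some references, I could not see anything wrong with how it was written.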

- How to use the GPU efficiently for RNNs in TensorFlow: https://www.zhihu.com/question/299843655

- Creating a bidirectional LSTM: https://riptutorial.com/zh-CN/tensorflow/example/17004/%E5%88%9B%E5%BB%BA%E5%8F%8C%E5%90%91lstm

After some more searching, I found that CuDNNLSTM could be used instead:

- https://blog.csdn.net/ssswill/article/details/89889395

- https://stackoverflow.com/questions/49987261/what-is-the-difference-between-cudnnlstm-and-lstm-in-keras

- Official documentation: https://www.tensorflow.org/versions/r1.15/api_docs/python/tf/contrib/cudnn_rnn/CudnnLSTM

- A usage demo I found: https://gist.github.com/protoget/9b45881f23c96e201a90581c8f4b692d

I have pasted this code into the appendix. When I actually used it, I ran into the error "Fail to find the dnn implementation."

https://github.com/tensorflow/tensorflow/issues/20067

https://github.com/keras-team/keras/issues/10634

However, once I specified which GPU to use, the problem went away.

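If you run into

    tensorflow.python.framework.errors_impl.UnknownError: Fail to find the dnn implementation.
        [[node cudnn_lstm_1/CudnnRNNCanonicalToParams (defined at lstm.py:596)]]

check whether the GPU you are using is already in use by someone else. If it is (even if it still has free memory), switch to a GPU that nobody else is using.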
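Picking an unused GPU can be automated. The following is a sketch under assumptions, not a tested recipe for this exact setup: it assumes `nvidia-smi` is on the `PATH`, treats a GPU as "in use" when it hosts any compute process, and uses hypothetical helper names (`pick_idle_gpu`, `select_idle_gpu`). It has to run before TensorFlow initializes the GPU, that is, before the first session is created.

```python
import os
import subprocess


def parse_busy_gpu_uuids(apps_csv):
    """Return the UUIDs of GPUs that host at least one compute process."""
    busy = set()
    for line in apps_csv.strip().splitlines():
        fields = [f.strip() for f in line.split(",")]
        if fields and fields[0]:
            busy.add(fields[0])
    return busy


def pick_idle_gpu(gpus_csv, apps_csv):
    """Return the index (as a string) of the first idle GPU, or None."""
    busy = parse_busy_gpu_uuids(apps_csv)
    for line in gpus_csv.strip().splitlines():
        fields = [f.strip() for f in line.split(",")]
        if len(fields) < 2:
            continue
        index, uuid = fields[0], fields[1]
        if uuid not in busy:
            return index
    return None


def query_nvidia_smi(query_flag, fields):
    """Run an nvidia-smi CSV query and return its stdout as text."""
    cmd = ["nvidia-smi", f"{query_flag}={fields}", "--format=csv,noheader"]
    return subprocess.check_output(cmd).decode()


def select_idle_gpu():
    """Expose only one idle GPU to this process via CUDA_VISIBLE_DEVICES."""
    gpus = query_nvidia_smi("--query-gpu", "index,uuid")
    apps = query_nvidia_smi("--query-compute-apps", "gpu_uuid,pid")
    index = pick_idle_gpu(gpus, apps)
    if index is None:
        raise RuntimeError("no idle GPU found")
    # Make CUDA number devices the same way nvidia-smi does.
    os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
    os.environ["CUDA_VISIBLE_DEVICES"] = index
    return index
```

Calling `select_idle_gpu()` at the top of the training script, before any session is created, restricts the process to that one device.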

Appendix

Rewritten version
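The rewritten code that follows leaves out its data transformation step. As a rough guide to what that step has to do, here is a NumPy sketch under two assumptions that are not stated in the original: `inputsPath` starts out as `[bz, num_steps, parent_len, emb]` (which is what the slicing `inputsPath[:, time_step, :, :]` in the original loop suggests), and the `num_steps` axis is folded into the batch axis so that a single time-major call covers every step. The helper names `to_cudnn_layout` and `unfold_last_step` are made up for this illustration.

```python
import numpy as np


def to_cudnn_layout(inputs_path):
    """[bz, num_steps, parent_len, emb] -> [parent_len, num_steps * bz, emb]."""
    bz, num_steps, parent_len, emb = inputs_path.shape
    # Put the recurrent axis (parent_len) first: time-major layout.
    time_major = inputs_path.transpose(2, 1, 0, 3)
    # Fold (num_steps, bz) into a single batch axis.
    return time_major.reshape(parent_len, num_steps * bz, emb)


def unfold_last_step(outputs, num_steps, bz):
    """Take the last time step and restore [num_steps, bz, hidden]."""
    last = outputs[-1]  # [num_steps * bz, hidden]
    return last.reshape(num_steps, bz, -1)


# Hypothetical sizes, chosen only for illustration.
bz, num_steps, parent_len, emb = 2, 3, 4, 5
inputs_path = np.zeros((bz, num_steps, parent_len, emb), dtype=np.float32)
folded = to_cudnn_layout(inputs_path)
print(folded.shape)
```

Folding the steps into the batch mirrors the weight sharing of the original loop, which reuses the same variables for every `time_step`; the result of `unfold_last_step` has the same `[num_steps, bz, hidden]` layout that stacking the per-step outputs along axis 0 produces.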

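    # Excerpt: my_rnn, inputsPath, parent_hidden_size and is_training are
    # defined elsewhere; my_rnn presumably refers to tf.contrib.cudnn_rnn.
    bilstm = my_rnn.CudnnLSTM(
        num_layers=1, num_units=parent_hidden_size // 2,
        direction='bidirectional',
        dropout=0.3,
        dtype=tf.float32)
    # data transformation omitted
    bilstm.build(inputsPath.get_shape())
    # [time_len, batch_size, input_size] -> [time_len, batch_size, num_dirs * num_units]
    my_rnn_outputs, _ = bilstm(inputsPath, training=is_training)
    # take only the last time step
    root_path_output = my_rnn_outputs[-1, :, :]
    # other operations omitted

The original bidirectional LSTM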

    import tensorflow as tf


    def encode_par_path(embedding_inputs, parent_hidden_size, rnn_layers=1,
                        keep_prob=0.7, bi_lstm=True):
        with tf.variable_scope('path_encoder') as encoder_scope:
            def build_cell(hidden_size):
                def get_single_cell(hidden_size, keep_prob):
                    cell = tf.nn.rnn_cell.BasicLSTMCell(hidden_size)
                    if keep_prob < 1:
                        cell = tf.nn.rnn_cell.DropoutWrapper(cell, output_keep_prob=keep_prob)
                    return cell

                cell = tf.nn.rnn_cell.MultiRNNCell(
                    [get_single_cell(hidden_size, keep_prob) for _ in range(rnn_layers)])
                return cell

            if not bi_lstm:
                encoder_cell = build_cell(parent_hidden_size)
                encoder_outputs, encoder_final_state = tf.nn.dynamic_rnn(
                    encoder_cell, embedding_inputs,
                    # sequence_length=self.par_seq_len,
                    dtype=tf.float32, scope=encoder_scope)
                return encoder_outputs, encoder_final_state
            else:
                # Integer division keeps the cell size an int under Python 3.
                encoder_cell = build_cell(parent_hidden_size // 2)
                bw_encoder_cell = build_cell(parent_hidden_size // 2)
                encoder_outputs, (fw_state, bw_state) = tf.nn.bidirectional_dynamic_rnn(
                    encoder_cell, bw_encoder_cell,
                    embedding_inputs,
                    # sequence_length=self.par_seq_len,
                    dtype=tf.float32, scope=encoder_scope)

                # Concatenate forward and backward states layer by layer.
                state = []
                for i in range(rnn_layers):
                    fs = fw_state[i]
                    bs = bw_state[i]
                    encoder_final_state_c = tf.concat((fs.c, bs.c), 1)
                    encoder_final_state_h = tf.concat((fs.h, bs.h), 1)
                    encoder_final_state = tf.nn.rnn_cell.LSTMStateTuple(
                        c=encoder_final_state_c,
                        h=encoder_final_state_h)
                    state.append(encoder_final_state)
                encoder_final_state = tuple(state)

                encoder_outputs = tf.concat([encoder_outputs[0], encoder_outputs[1]], -1)
                return encoder_outputs, encoder_final_state


    # Path2root
    # inputsPath, num_steps and parent_hidden_size are defined elsewhere.
    root_path = []
    with tf.variable_scope("RNN"):
        for time_step in range(num_steps):
            if time_step > 0:
                tf.get_variable_scope().reuse_variables()
            path_output, path_state = encode_par_path(
                inputsPath[:, time_step, :, :], parent_hidden_size)
            # [bz, parent_len, hidden]
            root_path.append(path_output[:, -1, :])
    # [seq_len, bz, hidden]
    root_path_output = tf.stack(axis=0, values=root_path)
    # [bz, seq_len, hidden]

Using CudnnLSTM

    from __future__ import absolute_import
    from __future__ import division
    from __future__ import print_function

    import numpy as np
    import tensorflow as tf

    shape = [2, 2, 2]
    n_cell_dim = 2


    def init_vars(sess):
        sess.run(tf.global_variables_initializer())


    def train_graph():
        # Build and save the model with the cuDNN kernel on the GPU.
        with tf.Graph().as_default(), tf.device('/gpu:0'):
            with tf.Session() as sess:
                is_training = True

                inputs = tf.random_uniform(shape, dtype=tf.float32)

                lstm = tf.contrib.cudnn_rnn.CudnnLSTM(
                    num_layers=1,
                    num_units=n_cell_dim,
                    direction='bidirectional',
                    dtype=tf.float32)
                lstm.build(inputs.get_shape())
                outputs, output_states = lstm(inputs, training=is_training)

                with tf.device('/cpu:0'):
                    saver = tf.train.Saver()

                init_vars(sess)
                saver.save(sess, '/tmp/model')


    def inf_graph():
        # Restore the same weights into cuDNN-compatible cells on the CPU.
        with tf.Graph().as_default(), tf.device('/cpu:0'):
            with tf.Session() as sess:
                single_cell = lambda: tf.contrib.cudnn_rnn.CudnnCompatibleLSTMCell(
                    n_cell_dim, reuse=tf.get_variable_scope().reuse)

                inputs = tf.random_uniform(shape, dtype=tf.float32)
                lstm_fw_cell = [single_cell() for _ in range(1)]
                lstm_bw_cell = [single_cell() for _ in range(1)]
                (outputs, output_state_fw,
                 output_state_bw) = tf.contrib.rnn.stack_bidirectional_dynamic_rnn(
                     lstm_fw_cell,
                     lstm_bw_cell,
                     inputs,
                     dtype=tf.float32,
                     time_major=True)
                saver = tf.train.Saver()

                saver.restore(sess, '/tmp/model')
                print(sess.run(outputs))


    def main(unused_argv):
        train_graph()
        inf_graph()


    if __name__ == '__main__':
        tf.app.run(main)

An example that runs on MNIST

    import tensorflow as tf
    import numpy as np
    from tqdm import tqdm
    from tensorflow.examples.tutorials.mnist import input_data
    import os

    os.environ['CUDA_VISIBLE_DEVICES'] = "0"
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

    mnist = input_data.read_data_sets('/tmp/data', one_hot=True)

    element_size = 28
    time_steps = 28
    num_classes = 10
    batch_size = 64
    hidden_layer_size = 128

    LOG_DIR = 'culstm'

    _inputs = tf.placeholder(tf.float32, shape=[batch_size, time_steps, element_size], name='inputs')
    y = tf.placeholder(tf.float32, shape=[None, num_classes], name='labels')

    with tf.name_scope('rnn'):
        rnn_input = tf.transpose(_inputs, [1, 0, 2])
        from tensorflow.contrib.cudnn_rnn import CudnnLSTM

        is_training = True
        lstm = tf.contrib.cudnn_rnn.CudnnLSTM(
            num_layers=1, num_units=hidden_layer_size,
            # UnknownError (see above for traceback): CUDNN_STATUS_EXECUTION_FAILED
            # dropout=0.3,
            dtype=tf.float32)
        lstm.build(rnn_input.get_shape())
        # [time_len, batch_size, input_size] -> [time_len, batch_size, num_dirs * num_units]
        outputs, _ = lstm(rnn_input, training=is_training)
        output = outputs[-1]

    with tf.name_scope('fc'):
        w = tf.Variable(tf.truncated_normal([hidden_layer_size, num_classes], mean=0, stddev=0.01), dtype=tf.float32)
        b = tf.Variable(tf.truncated_normal([num_classes], mean=0, stddev=0.01), dtype=tf.float32)
        y_pred = tf.matmul(output, w) + b

    loss = tf.nn.softmax_cross_entropy_with_logits(logits=y_pred, labels=y)
    loss = tf.reduce_mean(loss)
    optimizer = tf.train.RMSPropOptimizer(0.001, 0.9)
    train = optimizer.minimize(loss)

    correct_prediction = tf.equal(tf.argmax(y_pred, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    test_data = mnist.test.images[:batch_size].reshape(-1, time_steps, element_size)
    test_label = mnist.test.labels[:batch_size]

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        pbar = tqdm(range(10000))
        pbar.set_description(
            f'Train loss: , '
            f'accuracy '
            f'Test loss: , '
            f'accuracy ')
        print()
        for i in pbar:
            batch_x, batch_y = mnist.train.next_batch(batch_size)
            batch_x = batch_x.reshape(-1, time_steps, element_size)
            _, loss_np, accuracy_np = sess.run([train, loss, accuracy], feed_dict={_inputs: batch_x, y: batch_y})
            if i % 100 == 99:
                test_loss_np, test_accuracy_np = sess.run([loss, accuracy], feed_dict={_inputs: test_data, y: test_label})
                pbar.set_description(
                    f'Train loss: {loss_np:.4f}, '
                    f'accuracy {accuracy_np:.4f} '
                    f'Test loss: {test_loss_np:.4f}, '
                    f'accuracy {test_accuracy_np:.4f}')