Installing Kafka with Docker

1. Install ZooKeeper

docker run -d --name zookeeper -p 2181:2181 -v /etc/localtime:/etc/localtime wurstmeister/zookeeper:latest

2. Install Kafka

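docker run -d --name kafka --link zookeeper:zookeeper -p 9092:9092 -e KAFKA_BROKER_ID=0 -e KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092 -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 -t wurstmeister/kafka:latest

--link zookeeper:zookeeper Lets the Kafka container resolve the hostname zookeeper. On Docker's default bridge network, container names are not resolvable without it.

-e KAFKA_BROKER_ID=0 In a Kafka cluster, each broker identifies itself by a unique BROKER_ID.

-e KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 The ZooKeeper address that Kafka connects to. Appending a path, as in zookeeper:2181/kafka, keeps all of Kafka's data under the /kafka node in ZooKeeper.

-e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092 The address and port that Kafka registers in ZooKeeper and hands out to clients. Clients must be able to resolve the hostname kafka.

-e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 The address and port the broker listens on. 0.0.0.0 binds every interface inside the container.

-v /etc/localtime:/etc/localtime Keeps the container's local time (time zone) in sync with the host, here a virtual machine. This option is used in the ZooKeeper command above.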
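The Kafka container has to reach ZooKeeper under the hostname zookeeper. One way to get that name resolution is a user-defined bridge network, where Docker resolves container names through its built-in DNS. The following is a minimal sketch of that setup, not the only way to do it. The network name kafka-net, the DOCKER variable, and the function start_kafka_stack are illustrative names that do not come from the original commands, and the broker is assumed to listen on 0.0.0.0.

```shell
#!/bin/sh
# Sketch: run ZooKeeper and Kafka on a user-defined bridge network so the
# names "zookeeper" and "kafka" resolve through Docker's built-in DNS.
# NETWORK, DOCKER and start_kafka_stack are illustrative names (assumptions).
DOCKER="${DOCKER:-docker}"        # set DOCKER=echo to preview the commands
NETWORK="${NETWORK:-kafka-net}"

start_kafka_stack() {
    broker_id="${1:-0}"           # first argument: broker ID, default 0

    # Containers attached to the same user-defined network can reach each
    # other by container name.
    $DOCKER network create "$NETWORK" || return 1

    $DOCKER run -d --name zookeeper --network "$NETWORK" \
        -p 2181:2181 \
        -v /etc/localtime:/etc/localtime \
        wurstmeister/zookeeper:latest || return 1

    $DOCKER run -d --name kafka --network "$NETWORK" \
        -p 9092:9092 \
        -e KAFKA_BROKER_ID="$broker_id" \
        -e KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 \
        -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092 \
        -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
        wurstmeister/kafka:latest
}

# Usage (not run automatically):
#   DOCKER=echo start_kafka_stack 0   # print the commands only
#   start_kafka_stack 0               # actually create the containers
```

Running it first with DOCKER=echo prints the three commands without touching Docker, which makes it easy to review them before creating anything.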

Installing Kafka with Docker Compose

1. Write the docker-compose.yml file

version: "3.2"
services:
  zookeeper:
    image: zookeeper:latest
    restart: always
    #network_mode: "host"
    container_name: zookeeper
    ports:
      - "2181:2181"
    expose:
      - "2181"
    environment:
      - ZOO_MY_ID=1
  kafka:
    image: wurstmeister/kafka:latest
    restart: always
    #network_mode: "host"
    container_name: kafka
    environment:
      - KAFKA_BROKER_ID=1
      - KAFKA_LISTENERS=PLAINTEXT://kafka:9092
      - KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181
      - KAFKA_MESSAGE_MAX_BYTES=2000000
    ports:
      - "9092:9092"
    depends_on:
      - zookeeper

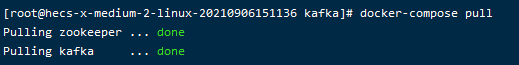

2. Pull the images

docker-compose pull

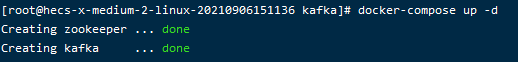

3. Create and start the containers (both services use prebuilt images, so nothing is built)

docker-compose up -d

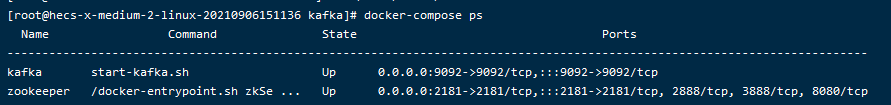

4. Check whether Kafka started successfully

docker-compose ps
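The pull, start, and check steps above can be combined into a small script, which is handy when the stack is recreated often. This is only a sketch: the COMPOSE and DOCKER variables and the function names bring_up and container_running are illustrative, and the check relies on docker inspect reporting the container's running state rather than on parsing the output of docker-compose ps.

```shell
#!/bin/sh
# Sketch: validate the compose file, pull, start, then check the container.
# COMPOSE, DOCKER, bring_up and container_running are illustrative names.
COMPOSE="${COMPOSE:-docker-compose}"
DOCKER="${DOCKER:-docker}"

bring_up() {
    $COMPOSE config -q || return 1   # validate docker-compose.yml, print nothing
    $COMPOSE pull || return 1        # download the images
    $COMPOSE up -d                   # create and start the containers
}

container_running() {
    # docker inspect prints "true" or "false" for .State.Running
    state=$($DOCKER inspect -f '{{.State.Running}}' "$1" 2>/dev/null)
    [ "$state" = "true" ]
}

# Usage (not run automatically):
#   bring_up && container_running kafka && echo "kafka is running"
```

A running container does not guarantee that the broker has finished starting, so a follow-up check such as listing topics is still worthwhile.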

Configuring Kafka's server.properties

1. Enter the container

docker exec -it kafka /bin/bash

2. Change to the config directory (Kafka's configuration files live in /opt/kafka/config)

cd /opt/kafka/config

3. Edit the server.properties file

vi server.properties

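# 1. Add advertised.host.name. If set, it is the hostname the broker advertises to producers, consumers, and other brokers. This property is deprecated in favor of advertised.listeners and was removed in Kafka 3.0.

advertised.host.name=<host machine IP>

# 2. Let Kafka create topics automatically

auto.create.topics.enable=true

# 3. Allow topics to be deleted

delete.topic.enable=true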

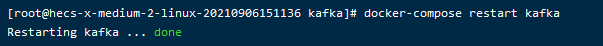
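Editing the file in vi is fine for a one-off change. For repeatable setups, the same settings can be applied non-interactively. The sketch below assumes a POSIX shell inside the container. set_property is a hypothetical helper written for this example, not something Kafka or the image provides. It replaces an existing key=value line or appends a new one, and it assumes the value contains no | or & characters and that the file ends with a newline.

```shell
#!/bin/sh
# Sketch: set key=value in a properties file without opening an editor.
# set_property is a hypothetical helper, not part of Kafka.
set_property() {
    file="$1"; key="$2"; value="$3"
    if grep -q "^${key}=" "$file"; then
        # Key already present: rewrite that line via a temporary file.
        sed "s|^${key}=.*|${key}=${value}|" "$file" > "$file.tmp" \
            && mv "$file.tmp" "$file"
    else
        # Key missing: append it as a new line.
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Usage inside the container (not run automatically):
#   cfg=/opt/kafka/config/server.properties
#   set_property "$cfg" auto.create.topics.enable true
#   set_property "$cfg" delete.topic.enable true
```

Lines that are commented out are treated as absent, so a commented default such as #delete.topic.enable=true stays in place and the active setting is appended after it.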

4. Exit the container and restart Kafka

docker-compose restart kafka

Common Kafka commands

List all topics

kafka-topics.sh --list --zookeeper 172.16.2.89:2181

Create a topic

kafka-topics.sh --create --topic TRS_Notify --zookeeper 172.16.2.89:2181 --config max.message.bytes=1280000 --config flush.messages=1 --partitions 1 --replication-factor 1

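--topic: the name of the topic, TRS_Notify in this example.

--zookeeper: the ZooKeeper address. It should match zookeeper.connect in server.properties.

--config: a topic-level setting that applies only to this topic.

--partitions: the number of partitions for the topic. If omitted, the default is the num.partitions value in server.properties.

--replication-factor: the number of replicas of each partition. The default is 1.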
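When topic creation is scripted, running the create command a second time fails because the topic already exists. A small wrapper with the --if-not-exists flag of kafka-topics.sh avoids that. This is a sketch under stated assumptions: create_topic and the KAFKA_TOPICS and ZOOKEEPER variables are illustrative names, the ZooKeeper address is the one used in this article, and the --zookeeper option assumes a Kafka version that still accepts it.

```shell
#!/bin/sh
# Sketch: create a topic only if it does not exist yet.
# create_topic, KAFKA_TOPICS and ZOOKEEPER are illustrative names.
KAFKA_TOPICS="${KAFKA_TOPICS:-kafka-topics.sh}"
ZOOKEEPER="${ZOOKEEPER:-172.16.2.89:2181}"

create_topic() {
    topic="$1"
    partitions="${2:-1}"   # second argument, default 1
    replicas="${3:-1}"     # third argument, default 1

    $KAFKA_TOPICS --create --if-not-exists \
        --zookeeper "$ZOOKEEPER" \
        --topic "$topic" \
        --partitions "$partitions" \
        --replication-factor "$replicas"
}

# Usage (not run automatically):
#   create_topic TRS_Notify        # 1 partition, 1 replica
#   create_topic TRS_Notify 3 1    # 3 partitions, 1 replica
```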

Start a Kafka producer

kafka-console-producer.sh --broker-list 172.16.2.89:9092 --topic TRS_Notify

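Start a Kafka consumer

# Read from the beginning

$KAFKA_HOME/bin/kafka-console-consumer.sh --bootstrap-server 172.16.2.89:9092 --topic TRS_Notify --from-beginning

# Read from the end. This is the default when no offset is given; --offset can only be used together with --partition.

$KAFKA_HOME/bin/kafka-console-consumer.sh --bootstrap-server 172.16.2.89:9092 --topic TRS_Notify

# Read a specific partition. Partitions are numbered from 0, so a topic created with --partitions 1 only has partition 0.

$KAFKA_HOME/bin/kafka-console-consumer.sh --bootstrap-server 172.16.2.89:9092 --topic TRS_Notify --offset latest --partition 0

# Read a fixed number of messages

$KAFKA_HOME/bin/kafka-console-consumer.sh --bootstrap-server 172.16.2.89:9092 --topic TRS_Notify --offset latest --partition 0 --max-messages 1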
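The producer and consumer commands can be combined into a quick round-trip check: send one message, then read one back. The following is a sketch, not a full health check. smoke_test and the BROKER, PRODUCER, and CONSUMER variables are illustrative names, and the broker address is the one used in this article. Because it reads with --from-beginning, it only returns the message just sent when the topic was empty beforehand.

```shell
#!/bin/sh
# Sketch: send one message and read one back as a round-trip check.
# smoke_test, BROKER, PRODUCER and CONSUMER are illustrative names.
BROKER="${BROKER:-172.16.2.89:9092}"
PRODUCER="${PRODUCER:-kafka-console-producer.sh}"
CONSUMER="${CONSUMER:-kafka-console-consumer.sh}"

smoke_test() {
    topic="$1"
    message="${2:-hello}"

    # The console producer reads messages from standard input, one per line.
    printf '%s\n' "$message" \
        | $PRODUCER --broker-list "$BROKER" --topic "$topic" || return 1

    # Read the first message of the topic, giving up after 10 seconds.
    received=$($CONSUMER --bootstrap-server "$BROKER" --topic "$topic" \
        --from-beginning --max-messages 1 --timeout-ms 10000 2>/dev/null)

    # Succeeds only if the message read equals the message sent.
    [ "$received" = "$message" ]
}

# Usage (not run automatically):
#   smoke_test TRS_Notify ping && echo "round trip OK"
```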

Delete a topic

kafka-topics.sh --delete --zookeeper 172.16.2.89:2181 --topic TRS_Notify

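# To remove the topic's nodes from ZooKeeper as well, run the following in the ZooKeeper shell (zkCli.sh). Use deleteall on ZooKeeper 3.5 and later, or rmr on older versions. The /kafka prefix applies only when zookeeper.connect ends in /kafka.

deleteall /kafka/config/topics/TRS_Notify

deleteall /kafka/brokers/topics/TRS_Notify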