历史文章:

1、python底层实现KNN:https://blog.csdn.net/cccccyyyyy12345678/article/details/117911220

2、Python底层实现决策树:https://blog.csdn.net/cccccyyyyy12345678/article/details/118389088

1、导入数据

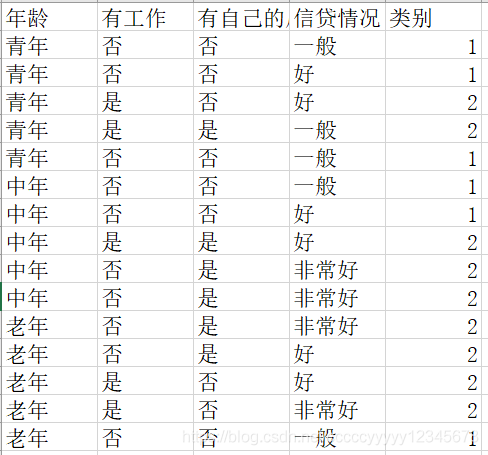

使用的数据和决策树数据相同,使用贷款数据,详情如下:

导入数据步骤和之前文章提到的KNN,决策树相同,借助python自带的pandas库导入数据。

def read_xlsx(csv_path):

data = pd.read_excel(csv_path)

print(data)

return data2、划分数据集

def train_test_split(data, test_size=0.2, random_state=None):

index = data.shape[0]

# 设置随机种子,当随机种子非空时,将锁定随机数

if random_state:

np.random.seed(random_state)

# 将样本集的索引值进行随机打乱

# permutation随机生成0-len(data)随机序列

shuffle_indexs = np.random.permutation(index)

# 提取位于样本集中20%的那个索引值

test_size = int(index * test_size)

# 将随机打乱的20%的索引值赋值给测试索引

test_indexs = shuffle_indexs[:test_size]

# 将随机打乱的80%的索引值赋值给训练索引

train_indexs = shuffle_indexs[test_size:]

# 根据索引提取训练集和测试集

train = data.iloc[train_indexs]

test = data.iloc[test_indexs]

return train, test3、计算类别标签的数量和比例

def calculate(data):

y = data[data.columns[-1]]

n = len(y)

count_y = {}

pi_y = {}

for i, j in y.value_counts().items():

count_y[i] = j

pi_y[i] = j/n

return count_y, pi_ycount_y是类别标签各自的数量,pi_y是类别标签各自的比例,均以字典形式保存。

4、标签选择

4.1 朴素贝叶斯

def testcalculate(train,test): #test = ["青年","否","否","一般"]

count_y, pi_y = calculate(train)

pi_list = []

ally = pd.unique(train.iloc[:, -1])

for y in ally:

pi_x = 1

features = list(train.columns[:-1])

for i in range(len(features)):

df = train[train[features[i]] == test[i]]

df = df[df.iloc[:, -1] == y]

pi_x = pi_x * len(df) / count_y[y]

# print(pi_x) #y=1条件下x所有特征乘积

pi = pi_y[y] * pi_x

pi_list.append(pi)

# print(ally)

# print(pi_list)

pi_list = np.array(pi_list)

index = np.argsort(-pi_list)

label = ally[index[0]]

return label从测试集的条件属性出发计算先验概率,此方法逻辑上较为简单,但是数据量较大时运行会比较慢,因为每次都要重新计算概率,而不是从计算好的结果中抽取想要的值。

4.2 拉普拉斯修正

训练集中未出现的属性值在计算条件概率时分子为零,此时该属性会被抹去,因此常用拉普拉斯平滑系数来修正条件概率。

def Lapcalculate(train,test,lap): #test = ["青年","否","否","一般"]

count_y, pi_y = calculate(train)

pi_list = []

ally = pd.unique(train.iloc[:, -1])

for y in ally:

pi_x = 1

features = list(train.columns[:-1])

for i in range(len(features)):

ni = pd.unique(train[features[i]]) #第i个属性可能的取值数

df = train[train[features[i]] == test[i]]

df = df[df.iloc[:, -1] == y]

pi_x = pi_x * (len(df)+lap) / (count_y[y]+len(ni))

# print(pi_x) #y=1条件下x所有特征乘积

pi = pi_y[y] * pi_x

pi_list.append(pi)

pi_list = np.array(pi_list)

index = np.argsort(-pi_list)

label = ally[index[0]]

print(label)

return label5、计算准确率

def accuracy(train,test):

correct = 0

for i in range(len(test)):

tiaojian = test.iloc[i, :-1].values

label = testcalculate(train, tiaojian)

if test.iloc[[i], -1].values == label:

correct += 1

accuracy = (correct / float(len(test))) * 100.0

print("Accuracy:", accuracy, "%")

return accuracy总结

贝叶斯方法中用到的很多计算方法和决策树相通,本质上都是数数。

完整代码已上传GitHub:https://github.com/chenyi369/Bayes