Jianshu link

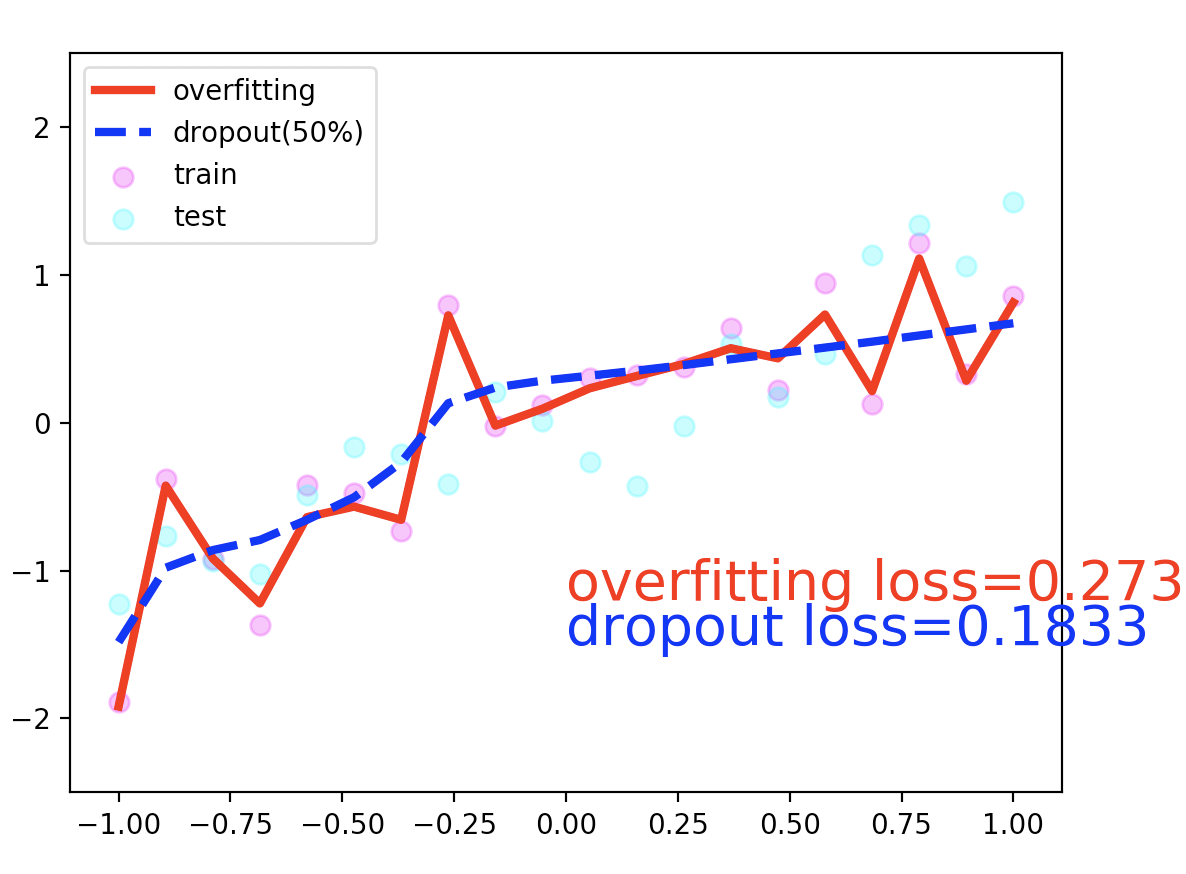

    import torch
    import matplotlib.pyplot as plt

    # Since PyTorch 0.4, tensors no longer need to be wrapped in Variable,
    # and volatile=True is replaced by torch.no_grad().

    torch.manual_seed(1)

    N_SAMPLES = 20
    N_HIDDEN = 300

    # training data
    x = torch.unsqueeze(torch.linspace(-1, 1, N_SAMPLES), 1)
    y = x + 0.3 * torch.normal(torch.zeros(N_SAMPLES, 1), torch.ones(N_SAMPLES, 1))

    # test data
    test_x = torch.unsqueeze(torch.linspace(-1, 1, N_SAMPLES), 1)
    test_y = test_x + 0.3 * torch.normal(torch.zeros(N_SAMPLES, 1), torch.ones(N_SAMPLES, 1))

    # show data
    # plt.scatter(x.numpy(), y.numpy(), c='magenta', s=50, alpha=0.5, label='train')
    # plt.scatter(test_x.numpy(), test_y.numpy(), c='cyan', s=50, alpha=0.5, label='test')
    # plt.legend(loc='upper left')
    # plt.ylim((-2.5, 2.5))
    # plt.show()

    # network without dropout (expected to overfit the 20 noisy points)
    net_overfitting = torch.nn.Sequential(
        torch.nn.Linear(1, N_HIDDEN),
        torch.nn.ReLU(),
        torch.nn.Linear(N_HIDDEN, N_HIDDEN),
        torch.nn.ReLU(),
        torch.nn.Linear(N_HIDDEN, 1),
    )

    # same architecture, with 50% dropout after each hidden linear layer
    net_dropped = torch.nn.Sequential(
        torch.nn.Linear(1, N_HIDDEN),
        torch.nn.Dropout(0.5),
        torch.nn.ReLU(),
        torch.nn.Linear(N_HIDDEN, N_HIDDEN),
        torch.nn.Dropout(0.5),
        torch.nn.ReLU(),
        torch.nn.Linear(N_HIDDEN, 1),
    )

    print(net_overfitting)
    print(net_dropped)

    optimizer_ofit = torch.optim.Adam(net_overfitting.parameters(), lr=0.01)
    optimizer_drop = torch.optim.Adam(net_dropped.parameters(), lr=0.01)
    loss_func = torch.nn.MSELoss()

    plt.ion()

    for t in range(500):
        pred_ofit = net_overfitting(x)
        pred_drop = net_dropped(x)
        loss_ofit = loss_func(pred_ofit, y)
        loss_drop = loss_func(pred_drop, y)

        optimizer_ofit.zero_grad()
        optimizer_drop.zero_grad()
        loss_ofit.backward()
        loss_drop.backward()
        optimizer_ofit.step()
        optimizer_drop.step()

        if t % 10 == 0:
            # switch to eval mode so that dropout is disabled for prediction
            net_overfitting.eval()
            net_dropped.eval()

            plt.cla()
            with torch.no_grad():
                test_pred_ofit = net_overfitting(test_x)
                test_pred_drop = net_dropped(test_x)
                test_loss_ofit = loss_func(test_pred_ofit, test_y).item()
                test_loss_drop = loss_func(test_pred_drop, test_y).item()

            plt.scatter(x.numpy(), y.numpy(), c='magenta', s=50, alpha=0.3, label='train')
            plt.scatter(test_x.numpy(), test_y.numpy(), c='cyan', s=50, alpha=0.3, label='test')
            plt.plot(test_x.numpy(), test_pred_ofit.numpy(), 'r-', lw=3, label='overfitting')
            # this line is cut off after 'b in the source; the line style and label are assumed
            plt.plot(test_x.numpy(), test_pred_drop.numpy(), 'b--', lw=3, label='dropout(50%)')
            plt.text(0, -1.2, 'overfitting loss=%.4f' % test_loss_ofit, fontdict={'size': 20, 'color': 'red'})
            plt.text(0, -1.5, 'dropout loss=%.4f' % test_loss_drop, fontdict={'size': 20, 'color': 'blue'})
            plt.legend(loc='upper left')
            plt.ylim((-2.5, 2.5))
            plt.pause(0.1)

            # switch back to train mode so that dropout is active again
            net_overfitting.train()
            net_dropped.train()

    plt.ioff()
    plt.show()
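
The script switches both networks to `eval()` before predicting on the test data and back to `train()` afterwards. The following is a minimal sketch of why that matters for `torch.nn.Dropout(0.5)`. The names `drop`, `ones`, `kept`, `train_out` and `eval_out` are illustrative and do not appear in the script above.

```python
import torch

torch.manual_seed(1)

drop = torch.nn.Dropout(0.5)   # same drop probability as in net_dropped
ones = torch.ones(1000)

# Train mode: each element is zeroed with probability 0.5, and the
# survivors are scaled by 1 / (1 - 0.5) = 2 so the expected value is unchanged.
drop.train()
train_out = drop(ones)
kept = train_out[train_out != 0]
print(kept.unique())            # tensor([2.])

# Eval mode: dropout is a no-op, the input passes through unchanged.
drop.eval()
eval_out = drop(ones)
print(torch.equal(eval_out, ones))  # True
```

Because dropout is random in train mode, evaluating the test set without calling `eval()` first would give noisy predictions that change on every forward pass.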