```python
import torch
```

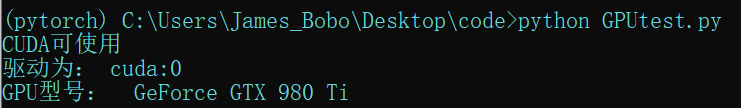

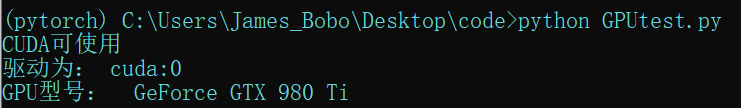

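```python
# Check whether PyTorch can see a CUDA device
flag = torch.cuda.is_available()
if flag:
    print("CUDA is available")
else:
    print("CUDA is not available")

ngpu = 1  # number of GPUs to use; 0 forces the CPU

# Use the first GPU when CUDA is available and ngpu > 0, otherwise fall back to the CPU
device = torch.device("cuda:0" if (torch.cuda.is_available() and ngpu > 0) else "cpu")
print("Device:", device)
if device.type == "cuda":
    # get_device_name raises on machines without CUDA, so only query it for a GPU device
    print("GPU model:", torch.cuda.get_device_name(0))
```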
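To reuse the check above, it can be wrapped in small functions. This is a sketch for illustration, not part of the original notes: `select_device` and `list_gpus` are hypothetical helper names, and `list_gpus` additionally uses `torch.cuda.device_count()` to report every visible GPU instead of only device 0.

```python
import torch


def select_device(ngpu: int = 1) -> torch.device:
    """Return cuda:0 when CUDA is usable and ngpu > 0, otherwise the CPU."""
    if ngpu > 0 and torch.cuda.is_available():
        return torch.device("cuda:0")
    return torch.device("cpu")


def list_gpus() -> list:
    """Return the names of all visible CUDA devices; empty on CPU-only machines."""
    if not torch.cuda.is_available():
        return []
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]


device = select_device(ngpu=1)
print("Device:", device)
for i, name in enumerate(list_gpus()):
    print(f"cuda:{i}: {name}")
```

Keeping the CUDA queries behind `torch.cuda.is_available()` means the same script runs unchanged on machines without a GPU.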

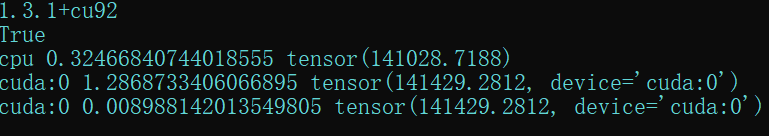

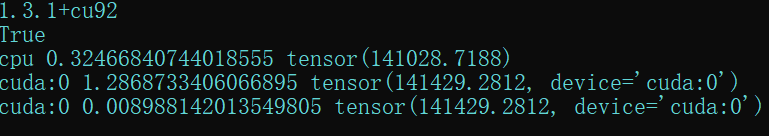

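```python
import time

import torch

print(torch.__version__)
print(torch.cuda.is_available())

# Multiply a (10000 x 1000) matrix by a (1000 x 2000) matrix on the CPU
a = torch.randn(10000, 1000)
b = torch.randn(1000, 2000)

t0 = time.time()
c = torch.matmul(a, b)
t1 = time.time()
print(a.device, t1 - t0, c.norm(2))

# Move both operands to the GPU (requires a CUDA-capable device)
device = torch.device('cuda')
a = a.to(device)
b = b.to(device)

# First GPU run: also pays one-time CUDA/cuBLAS initialization costs
t0 = time.time()
c = torch.matmul(a, b)
torch.cuda.synchronize()  # CUDA kernels run asynchronously; wait for completion before reading the clock
t2 = time.time()
print(a.device, t2 - t0, c.norm(2))

# Second GPU run: closer to the steady-state cost of the multiplication
t0 = time.time()
c = torch.matmul(a, b)
torch.cuda.synchronize()
t2 = time.time()
print(a.device, t2 - t0, c.norm(2))
```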
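The benchmark above times each GPU call once, and the first GPU call typically includes one-time initialization overhead. A minimal sketch of a reusable timer that builds in untimed warm-up calls and a synchronization hook called before each clock read, using only the standard library (`time_op` and its parameters are hypothetical names, not a PyTorch API):

```python
import time
from statistics import mean
from typing import Callable, Optional


def time_op(fn: Callable[[], object],
            sync: Optional[Callable[[], None]] = None,
            warmup: int = 1,
            repeats: int = 5) -> float:
    """Return the mean wall-clock time of fn() in seconds.

    warmup: untimed calls that absorb one-time setup costs.
    sync:   called before each clock read; for GPU work pass a function that
            blocks until queued kernels finish (e.g. torch.cuda.synchronize).
    """
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    for _ in range(warmup):
        fn()
    if sync is not None:
        sync()  # make sure warm-up work is finished before timing starts
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        if sync is not None:
            sync()  # wait for asynchronous work launched by fn()
        timings.append(time.perf_counter() - start)
    return mean(timings)


# Pure-Python demo; no GPU needed
print(time_op(lambda: sum(range(100_000))))
```

With the tensors from the benchmark, a GPU measurement would look like `time_op(lambda: torch.matmul(a, b), sync=torch.cuda.synchronize)`; for CPU tensors `sync` can stay `None`. PyTorch also ships `torch.utils.benchmark.Timer`, which is designed to take care of warm-up and CUDA synchronization itself and is worth considering for more careful measurements.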